
Target audience

As a first step, we are targeting researchers in robotics, artificial intelligence and computer vision, as well as hobby robotics enthusiasts. For these users, we identified and addressed the following key requirements:

- completely open (hardware and software)

- equipped with a typical, widely used set of sensors

- easily customizable to integrate new or different types of sensors and actuators

- an energy-efficient yet powerful on-board computer

- a bi-directional communication link to transmit sensor and control data in real time

- a set of software modules that support distributed data processing and provide a hardware abstraction layer, so that developers can concentrate on experiments and applications in their core areas of competence

- considerably lower cost compared to similar available products

Chassis and body

We are using the Dagu Rover 5 tracked chassis with two motors and two quadrature encoders. The whole body is 3D-printed and provides:

- a rotatable "head" (for mounting the camera)

- a folding mast for sensors that are sensitive to electromagnetic interference (for example, the digital compass)

- a compartment for exchangeable batteries

- "doors" for accessing the internal electronic connectors

- extension options for additional electronics, sensors and actuators

The first two pictures show our current model, and the last two show rendered 3D models of the alternative bodies we are currently working on.
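The Rover 5 chassis reports wheel motion through its two quadrature encoders. As an illustration of how such signals are commonly turned into a tick count and a wheel speed, here is a minimal Python sketch. It assumes the A/B channel levels have already been sampled into 2-bit integers; the function names, the choice of "forward" direction and the `ticks_per_rev` and `wheel_circumference_m` parameters are hypothetical and not taken from our code or the chassis datasheet.

```python
from typing import List

# Gray-code sequence of (A << 1 | B) states for one rotation direction,
# which we arbitrarily call "forward" here (depends on wiring; assumption).
_STATE_ORDER = [0b00, 0b01, 0b11, 0b10]


def step(prev: int, curr: int) -> int:
    """Return +1, -1 or 0 for a transition between two 2-bit encoder states."""
    if prev == curr:
        return 0
    diff = (_STATE_ORDER.index(curr) - _STATE_ORDER.index(prev)) % 4
    if diff == 1:
        return +1
    if diff == 3:
        return -1
    # diff == 2: both channels changed between samples, so a state was missed
    # and the direction cannot be determined.
    raise ValueError("invalid transition: sampling too slow")


def count_ticks(samples: List[int]) -> int:
    """Sum the signed steps over a sequence of sampled states (4x decoding)."""
    return sum(step(a, b) for a, b in zip(samples, samples[1:]))


def wheel_speed(ticks: int, dt_s: float, ticks_per_rev: float,
                wheel_circumference_m: float) -> float:
    """Linear speed in m/s from the ticks counted during dt_s seconds."""
    return ticks / ticks_per_rev * wheel_circumference_m / dt_s
```

Because every edge on either channel is counted, one full electrical cycle yields four ticks; a real driver would sample in a timer interrupt or real-time task fast enough that no state is ever skipped.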

Sensors and on-board electronics

In the full configuration, the following sensors are available:

- four ultrasonic range finders (on the sides, front and back)

- two video cameras

- pan/tilt compensated digital compass

- GPS receiver
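Ultrasonic range finders measure distance by timing an echo. Assuming a sensor that reports the raw round-trip echo time, the conversion to distance is a one-liner; the sketch below also uses a common linear approximation for the temperature dependence of the speed of sound in air. The function names and default values are assumptions for illustration.

```python
def speed_of_sound_m_s(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air: 331.3 m/s + 0.606 m/s per degree C."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance_m(echo_time_s: float, speed_m_s: float = 343.0) -> float:
    """The pulse travels to the obstacle and back, so halve the round trip."""
    return speed_m_s * echo_time_s / 2.0


# Usage: an echo of 5.83 ms corresponds to roughly 1 m at about 20 degrees C.
print(round(echo_to_distance_m(0.00583), 2))  # 1.0
```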

Connectivity

The robot is equipped with an IEEE 802.11 b/g/n WLAN adapter. We are currently testing operation with a 3G (UMTS) modem. Software is already available to control the robot remotely over the Internet using real-time video streamed from the on-board camera. Autonomous operation is, of course, also possible. In particular, we support the cloud-robotics paradigm: complex navigation algorithms can be executed on a powerful external computer if the on-board computer does not provide enough performance.

Software

All of the software is open source and available on GitHub. We offer the complete stack, from the operating system up to the communication infrastructure and the client-side user interface and visualization applications:

- we provide a customized image based on the popular Angstrom Linux distribution, optimized for the BeagleBoard.

- we use the Xenomai real-time extension for Linux for motor control and other time-sensitive tasks.

- sensors and actuators are remotely accessible (over the network) through corresponding software components.

- for all remote communication we use ZeroC's Ice (Internet Communication Engine) middleware.

- Ice allows developers to use a wide range of programming languages (C++, Python, Ruby, Java and all .NET languages) to develop their own software components.

- Ice also supports different communication paradigms, such as publish/subscribe and RPC-style remote invocations. This gives developers great flexibility when designing their own software components.
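To make the difference between these two paradigms concrete, here is a toy, in-process Python sketch. It is not Ice code and does not use the Ice or IceStorm APIs; `TopicBus`, `SonarService` and the topic names are hypothetical. With publish/subscribe, a publisher pushes a message to a topic without knowing who receives it; with RPC, a client calls a method on a specific object and waits for the result.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List


class TopicBus:
    """Toy publish/subscribe broker: publishers and subscribers only share a topic name."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver message to every subscriber of topic; return the number of deliveries."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


class SonarService:
    """Toy RPC-style servant: the caller invokes a method and gets a reply back."""

    def __init__(self, readings_m: Dict[str, float]) -> None:
        self._readings = dict(readings_m)

    def get_range(self, sensor: str) -> float:
        return self._readings[sensor]


# Usage: a logger and a display both receive the same front-sonar reading.
bus = TopicBus()
log: List[float] = []
bus.subscribe("sonar/front", log.append)
bus.subscribe("sonar/front", lambda m: print(f"front: {m} m"))
bus.publish("sonar/front", 0.42)

sonar = SonarService({"front": 0.42, "back": 1.3})
print(sonar.get_range("back"))  # 1.3
```

In a distributed setting the middleware replaces the direct method calls with network transport, but the division of roles stays the same.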

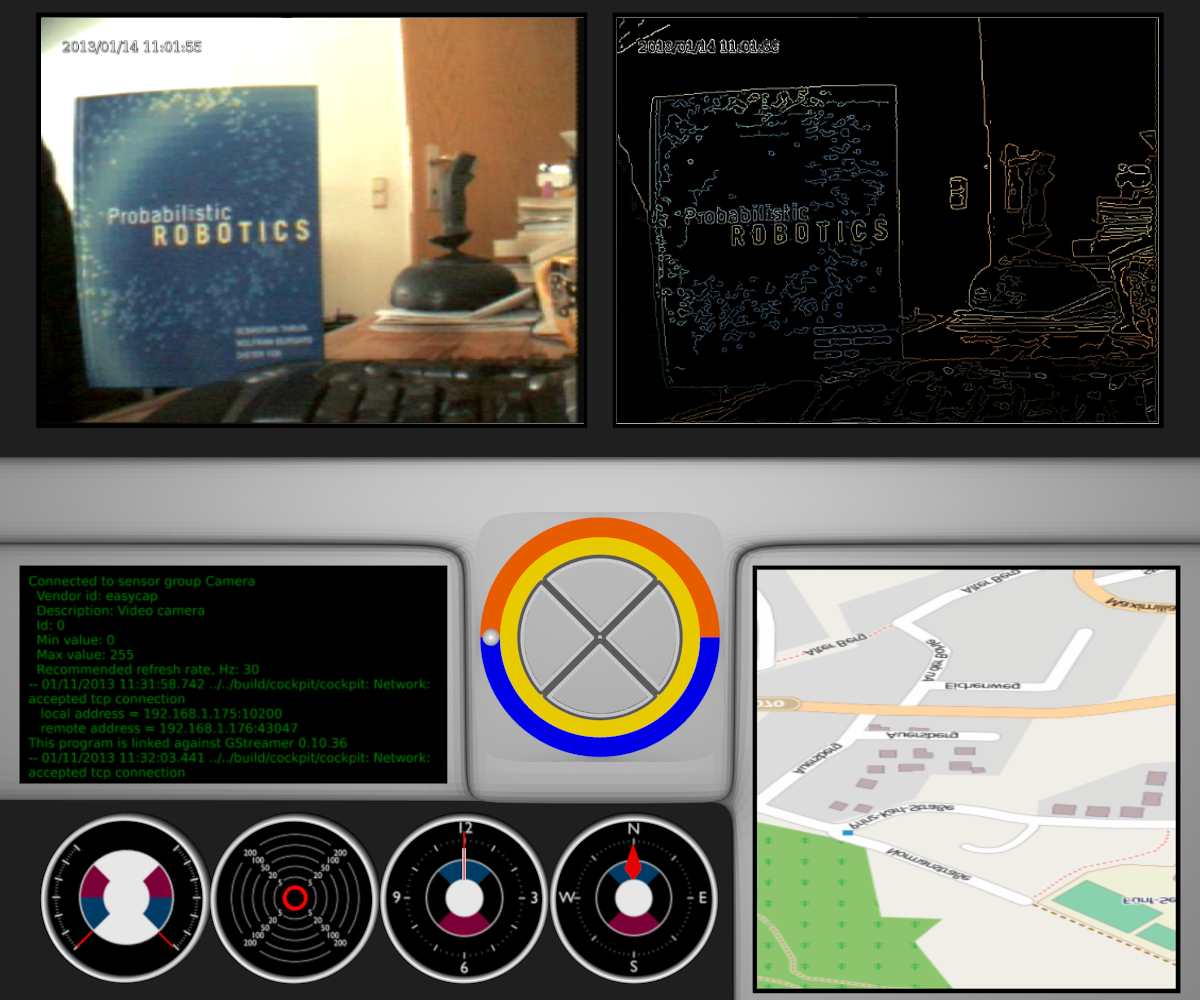

The left video panel shows the original video stream, and the right one shows the stream processed with OpenCV (in this case, using Canny edge detection). At the bottom are indicators for the current motor speed, sonar measurements, compass heading, etc.
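Canny edge detection finishes with hysteresis thresholding: pixels whose gradient magnitude reaches a high threshold are accepted as edges, and weaker pixels above a low threshold are kept only if they are connected to an accepted one. OpenCV's `cv2.Canny(image, threshold1, threshold2)` does this internally, using the smaller threshold for edge linking and the larger one to find strong edge segments. The stdlib-only Python sketch below illustrates just that final step on a precomputed magnitude grid; it is not OpenCV's implementation, and the function name is hypothetical.

```python
from collections import deque
from typing import Deque, List, Tuple


def hysteresis(magnitude: List[List[float]], low: float, high: float) -> List[List[bool]]:
    """Keep strong pixels (>= high) plus weak pixels (>= low) 8-connected to them."""
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue: Deque[Tuple[int, int]] = deque()

    # Seed the search with all strong pixels.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] >= high:
                edges[r][c] = True
                queue.append((r, c))

    # Breadth-first growth into neighboring weak pixels.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges
```

Weak responses that touch no strong edge, such as isolated noise, are discarded, which is why Canny output tends to consist of connected contours.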

To demonstrate how to develop solutions for typical robotics problems using our platform, we implemented several homework assignments from the "Programming A Robotic Car" online course taught by Prof. Sebastian Thrun. These examples illustrate the applicability of our platform in the educational domain. In particular, compared to the popular LEGO Mindstorms systems, our system offers more computational power and a more flexible set of software building blocks for solving typical robotics problems. Cloud robotics and distributed autonomous robotic systems are promising future directions. Our platform is a step in this direction, and our customers can benefit by reusing our software and hardware and concentrating on their own areas of competence.
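As an example of the kind of problem such assignments address, here is a minimal Python sketch of a one-dimensional histogram filter for localization in a cyclic world of colored cells. The world, the sensor and motion probabilities and the function names are illustrative assumptions, not the course's code or the assignments we implemented.

```python
from typing import List


def sense(p: List[float], world: List[str], measurement: str,
          p_hit: float, p_miss: float) -> List[float]:
    """Measurement update: weight each cell by the observation likelihood, then normalize."""
    q = [pi * (p_hit if cell == measurement else p_miss) for pi, cell in zip(p, world)]
    total = sum(q)
    return [qi / total for qi in q]


def move(p: List[float], shift: int, p_exact: float,
         p_undershoot: float, p_overshoot: float) -> List[float]:
    """Motion update on a cyclic world: spread belief over exact, short and long moves."""
    n = len(p)
    q = []
    for i in range(n):
        s = p_exact * p[(i - shift) % n]            # moved exactly `shift` cells
        s += p_overshoot * p[(i - shift - 1) % n]   # moved one cell too far
        s += p_undershoot * p[(i - shift + 1) % n]  # moved one cell too little
        q.append(s)
    return q


# Usage: start uncertain, see "red", then move one cell to the right.
world = ["green", "red", "red", "green", "green"]
belief = [0.2] * 5
belief = sense(belief, world, "red", p_hit=0.6, p_miss=0.2)
belief = move(belief, 1, p_exact=0.8, p_undershoot=0.1, p_overshoot=0.1)
print([round(b, 3) for b in belief])
```

Sensing sharpens the belief, while each inexact move blurs it again; alternating the two updates is the core loop of this kind of localization.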